Blog

Welcome to our blog, where we dive into captivating news articles and share exciting mini blog reports about our groundbreaking research and the events surrounding it. We’re thrilled to have a team of talented undergraduate and high school interns who are actively involved in curating and managing this platform, allowing us to connect with a wider audience. Get ready to embark on a journey of knowledge as we share our research findings and insights. Sit back, relax, and enjoy the fascinating world of science and innovation!

Jump to one of the news articles below.

- New Grant from the Massachusetts Government

- Reflections from CHI 2025 in Yokohama

- Build Your First Interactive Generative AI Website with Meta’s LLaMA Model

- Create Your First Interactive Website Powered by Generative AI with Meta’s LLaMA Model

- Mini-Course: Start Gig Work with Generative AI

- Navigating the Gig Economy with AI: Building a Smart Career Guidance System

- Ethical AI at Akamai

- Using AI for Peace-Building with IFIT

- Hello World Tutorial with Meta’s Llama 3.2

- “Hello World” Tutorial with Meta’s Llama 3.2

- Using Generative AI to Create Sustainable Business Plans: A Mini-Course

- Keynote Speaker at the Mexican AI Conference (MICAI)

- Workshop on AI Tools for Labor at the AAAI HCOMP Conference

- Driving the Future of Work in Mexico through Artificial Intelligence: My Experience with the Global Partnership on AI (GPAI)

- Driving the Future of Work in Mexico through Artificial Intelligence: My Experience at the Global Partnership on AI (GPAI)

- Dr. Savage’s Journey with the OECD’s Global Partnership on AI (GPAI)

- The Many Futures of Design: Shaping UX with Futures Thinking – A Journey through UXPA 2024

- Lessons on Polarization from Global Leaders at the Ford Foundation

- Insights from the Premiere Scientific Conference on HCI: CHI 2024

- Into the World of AI for Good: Reflections on My First Week in the Civic AI Lab at Northeastern

- Starting My High School Summer Internship at the Northeastern Civic AI Lab!

- Human Centered AI Live Stream: Sota Researcher!

- Designing Public Interest Tech to Fight Disinformation

- Recap: HCOMP 2022

- List of MIT Tech Inspiring Innovators

- Fighting online trolls with bots

- How Can Artificial Intelligence Be Applied to Make Government Procedures More Efficient?

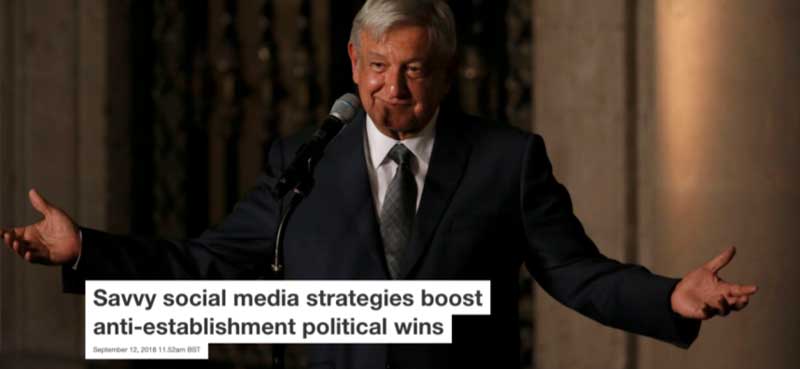

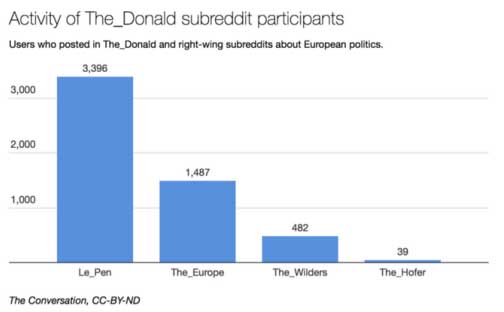

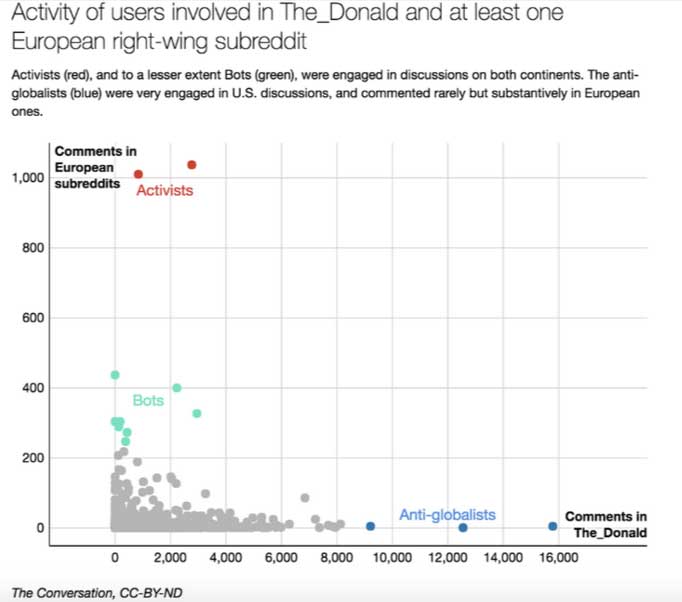

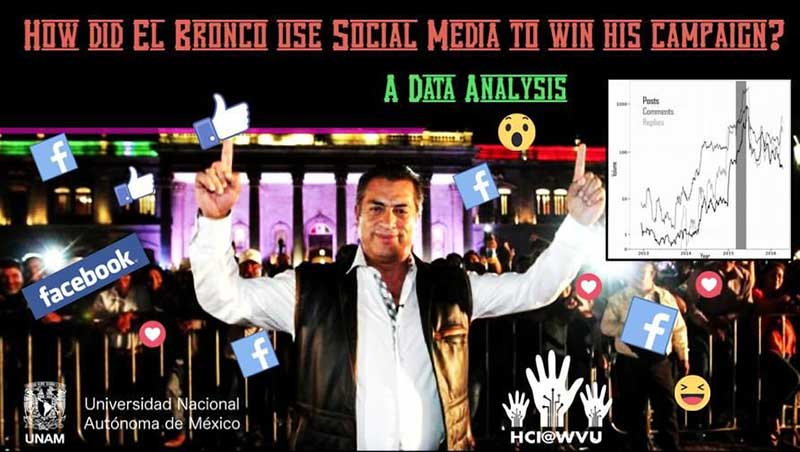

- Savvy social media strategies boost anti-establishment political wins

- Activist Bots: Helpful But Missing Human Love?

- ‘Making Europe Great Again,’ Trump’s online supporters shift attention to the French election

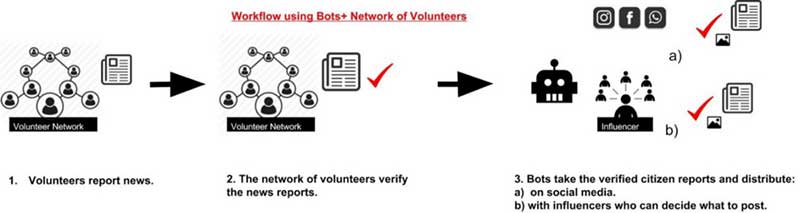

- “Countering Fake News in Natural Disasters via Bots and Citizen Crowds”

New Grant from the Massachusetts Government

Our civic AI lab has received a large grant from the Massachusetts government, specifically the Massachusetts Trial Court.

With this support, we will develop AI tools and a suite of education courses for incarcerated and formerly incarcerated individuals.

What this grant makes possible

Our goal is to teach AI fundamentals and the use of digital labor platforms so participants can apply to jobs online in the tech sector and across the local Massachusetts economy.

Why these skills matter

AI and digital platforms shape how work is found, evaluated, and performed. Learning how AI systems function, how to collaborate with them, and how to build a strong online work profile increases access to opportunity.

These skills help people compete for remote and local roles, grow income stability, and reduce barriers during reentry. In short, AI and digital literacy are becoming core workforce skills for Massachusetts.

Co-designed with justice-impacted leaders

Our courses were co-designed with justice-impacted team members, including Program Manager Jesse Nava, who brings lived experience from the California Department of Corrections and Rehabilitation (CDCR). Their leadership ensures the curriculum is practical, respectful, and relevant to real constraints.

When justice-impacted experts help design both the courses and the AI tools, the result is more accessible content, clearer language, and better alignment with learners’ goals. This approach also builds trust, which increases engagement and completion.

What we are building

- AI foundations: plain-language modules on how AI works and where it shows up in everyday jobs

- Digital labor platforms: hands-on guidance for creating profiles, finding gigs, managing reputation, and getting paid

- Job readiness: portfolio tips, resume building with AI assistance, and interviewing practice

- Flexible delivery: live online sessions, select in-person classes at Northeastern University, and pre-recorded tutorials that can be played on prison tablets where permitted

Looking ahead

We are grateful to the Massachusetts Trial Court for this vote of confidence. Our team is energized to deliver tangible impact for people across Massachusetts who are ready to learn, work, and thrive. We look forward to sharing milestones as the program rolls out and welcomes its first cohorts.

Reflections from CHI 2025 in Yokohama

I just returned from an unforgettable week at CHI 2025 in Yokohama, Japan, where I had the opportunity to share research, reconnect with beautiful minds in HCI, and dive into new global conversations about how we design technology that truly works for everyone. CHI (Conference on Human Factors in Computing Systems) is one of the top international conferences on human-computer interaction, bringing together researchers to explore how people interact with technology. This year’s CHI felt especially timely: with AI rapidly reshaping how we build, communicate, and even understand each other, the urgency to design with dignity and care has never been greater.

Presenting Our Paper: Generative AI, Accessibility

At CHI, I presented our paper, “The Impact of Generative AI Coding Assistants on Developers Who Are Visually Impaired.” In an era where tools like GitHub Copilot and ChatGPT are transforming how developers code, we asked: What happens when these tools are used by developers who are blind or visually impaired?

Using an Activity Theory framework, our team conducted a qualitative study with blind and visually impaired developers as they worked through coding tasks using generative AI assistants. We uncovered:

- Cognitive Overload: Participants were overwhelmed by excessive AI suggestions, prompting a call for “AI timeouts” that allow users to pause or slow down AI help to regain control.

- Navigation Challenges: Screen readers made it difficult to distinguish between user-written and AI-generated code, creating context-switching barriers.

- Seamless Control: Participants expressed a desire for AI that adapts to their pace and supports their workflows, rather than taking over.

- Optimism & Friction: Despite the challenges, developers were hopeful—highlighting the importance of designing AI systems grounded in lived experience.

This work offers design implications not just for accessibility, but for how to meaningfully integrate AI into diverse real-world workflows.

CHI 2025 Paper Highlights:

- NightLight: A smartphone-based system that passively collects ambient lighting data through built-in sensors to help pedestrians choose safer nighttime walking routes. 70% of users altered their routes when shown light-augmented maps, demonstrating the power of low-cost, AI-powered safety interventions.

- VR and Team Belonging (Mariana Fernandez, Notre Dame): A study on how VR can support inclusion in newly formed teams. Newcomers felt significantly more accepted in VR settings compared to in-person ones, thanks to avatar anonymity and immersive design reducing social pressure.

- AI in Social Care (South Korea): An analysis of a real-world deployment of an LLM-powered voice chatbot used to check in on socially isolated individuals. Contrary to expectations, the system increased workload for frontline workers due to unforeseen maintenance burdens, revealing the “invisible labor” of human-AI collaboration.

- Political Ideology & App Use: A U.S.-based study using structural equation modeling to show how political beliefs, not just privacy concerns, influenced adoption of COVID contact-tracing apps. People were more willing to share health data to help others than for self-protection, highlighting the deep sociopolitical layers of tech trust.

- Participatory GenAI for Reentry (Richard Martinez, UC Irvine): A co-design study with formerly incarcerated youth using AI for creative and entrepreneurial projects. Participants designed novel GenAI use cases based on their lived experiences, reshaping notions of expertise in AI and spotlighting the need for infrastructure that serves the margins first.

Keynote by Mutale Nkonde: Designing AI with Curiosity and Accountability

This year’s keynote by Mutale Nkonde—AI policy researcher and founder of AI for the People—delivered a powerful critique of current AI design paradigms. Drawing from her background in journalism and digital humanities, she called for a socio-technical approach to AI that centers lived experience, history, and adaptability.

- Main Argument: AI is often misused “in the wild” because designers fail to anticipate the messy, social realities in which systems operate.

- Example: Google’s LLM generating Nazi content was framed as a failure of foresight—designers had not imagined the breadth of harm users could elicit.

- Call to Action: Combine social science frameworks with red teaming—adaptive, adversarial simulations that surface hidden risks by testing evolving AI tools in diverse cultural and political contexts.

- Critique: She acknowledged the tension between tech and social science communities, urging mutual learning rather than an “us vs. them” stance.

Takeaway: If we want AI to serve the public good, we must not only anticipate user behavior—but also stress-test systems with communities who will be most affected.

Co-Organizing the Workshop on Explainable AI

I also co-organized the “Explainable AI in the Wild” workshop, where we asked: How do we build explainable AI that works for real people? We explored questions of power, cultural context, and transparency—moving beyond technical definitions to address who needs explanations and why.

Yokohama: A City of Reflection and Futurism

Yokohama provided the perfect backdrop to reflect on global futures in tech. From stunning harbor views to the mix of tradition and innovation, it was the ideal setting for a conference rooted in community and vision.

One of the personal highlights of the trip was reconnecting with my PhD advisor, who attended my talk and offered thoughtful feedback. We also had dinner together with his lab—my academic siblings—and spent the evening exchanging stories, laughing, and reflecting on the winding paths of our research journeys. It was deeply inspiring to hear about his current work and to receive career advice grounded in years of experience navigating academia, mentoring, and interdisciplinary research.

Moments like these remind me of the value of mentorship and how much we grow by staying connected to those who helped shape our intellectual foundations. I left that dinner energized and grateful for the ongoing guidance and camaraderie in our academic lineage.

Final Thoughts

CHI 2025 reaffirmed a belief I hold deeply: the future of AI must be human-centered, justice-oriented, and designed in partnership with those most often excluded.

Our tools can either widen the gaps—or help bridge them. It’s on us to choose the latter.

Until next time, CHI.

#CHI2025 #AccessibleTech #AIForGood #ParticipatoryDesign #GenerativeAI #XAI #HumanCenteredAI #DigitalJustice #HCI #InclusionInTech #Yokohama

Build Your First Interactive Generative AI Website with Meta’s LLaMA Model

Hi friends!

In this tutorial, you’ll create a super simple website where users can type a question and get a response from an AI — just like ChatGPT, but using Meta’s LLaMA model (an open-source AI model) through Hugging Face.

You’ll learn how to:

- Use an AI model

- Create a basic web page

- Connect it all with Python code

What Are These Tools?

Before we start coding, let’s understand the tools we’re using:

Hugging Face

Think of this as a giant library of powerful AI models (like LLaMA, GPT, etc.) that you can use in your own apps. It’s like the Netflix of AI models — just log in, choose a model, and go!

Flask (Python)

Flask is a lightweight Python web framework that makes your Python code available through a website. It’s like the brain behind the scenes: it listens to the user’s question, sends it to the AI, and gives back the answer.

Step-by-Step Tutorial

Step 1: Open Google Colab

Go to Google Colab and open a new notebook.

Step 2: Install the Tools We Need

!pip install flask flask-ngrok transformers torch pyngrok

Step 3: Log Into Hugging Face

- Go to https://huggingface.co

- Sign up/log in → Go to Settings > Access Tokens

- Copy your token and paste it into this code:

from huggingface_hub import login

HUGGING_FACE_KEY = "paste-your-key-here"

login(HUGGING_FACE_KEY)

Step 4: Load Meta’s LLaMA Model

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

model_name = "meta-llama/Llama-3.2-1B"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

Step 5: Let’s Build the Backend (The Brain Behind the Website)

What’s the backend?

It’s like the chef in a restaurant. The user places an order (asks a question), and the backend prepares the response using AI.

    from flask import Flask, request, jsonify
    from flask_ngrok import run_with_ngrok

    app = Flask(__name__)
    run_with_ngrok(app)  # expose the app through a public ngrok link when it runs

    @app.route("/ask", methods=["POST"])
    def ask():
        # Read the user's question from the JSON body of the request
        prompt = request.json.get("prompt", "")
        inputs = tokenizer(prompt, return_tensors="pt")
        # Pass the attention mask too, and cap the number of newly generated tokens
        outputs = model.generate(**inputs, max_new_tokens=100)
        response = tokenizer.decode(outputs[0], skip_special_tokens=True)
        return jsonify({"response": response})

Step 6: Create the Web Page (Frontend)

Now, let’s build the frontend — the part that people see and interact with.

    %%writefile index.html
    <!DOCTYPE html>
    <html>
    <head><title>Ask AI</title></head>
    <body>
      <h2>Ask the AI</h2>
      <textarea id="prompt" rows="4" cols="50" placeholder="Type your question here..."></textarea><br>
      <button onclick="askAI()">Ask</button>
      <p><strong>Response:</strong> <span id="response"></span></p>
      <script>
        async function askAI() {
          const prompt = document.getElementById("prompt").value;
          const res = await fetch("/ask", {
            method: "POST",
            headers: { "Content-Type": "application/json" },
            body: JSON.stringify({ prompt: prompt })
          });
          const data = await res.json();
          document.getElementById("response").innerText = data.response;
        }
      </script>
    </body>
    </html>

Step 7: Connect It All and Run the App

    from flask import send_from_directory

    @app.route('/')
    def serve_frontend():
        return send_from_directory('.', 'index.html')

    app.run()

You’ll get a link like this: https://xxxxx.ngrok.io

Click it and…
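If you would rather test the backend from Python than from the browser, the sketch below builds the same JSON POST request that the web page sends to the /ask route. The helper name build_ask_request and the placeholder link are assumptions made for illustration; nothing is sent unless you uncomment the last lines and use your own ngrok link.

```python
import json
import urllib.request


def build_ask_request(base_url: str, prompt: str) -> urllib.request.Request:
    """Build the POST request that the web page sends to the /ask route."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/ask",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder link (assumption): replace it with the one printed by app.run().
req = build_ask_request("https://xxxxx.ngrok.io", "What is Flask?")
print(req.full_url, req.get_method())

# To actually send it (the app from Step 7 must be running):
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read())["response"])
```

The request body mirrors the frontend: a JSON object with a single "prompt" key, which is what the ask() function reads.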

Final Words

You just built:

- A working AI assistant using Meta’s LLaMA model

- A custom web page

- A backend in Python to power it

You’re officially coding with real AI models. That’s amazing. Welcome to the world of AI development!

Create Your First Interactive Website Powered by Generative AI with Meta’s LLaMA Model

Hello, friends!

In this tutorial, you will create a very simple web page where people can type a question and receive an answer from an AI, just like with ChatGPT! Here, though, you will use Meta’s LLaMA model (an open-source AI model) through Hugging Face.

You will learn to:

- Use an AI model

- Create a basic web page

- Connect everything with Python code

What Tools Are We Going to Use?

Before we start writing code, let’s get to know the tools:

Hugging Face

Imagine a giant library of powerful AI models (such as LLaMA, GPT, etc.) that you can use in your projects. It’s like the Netflix of AI models: just log in, pick one, and you’re set!

Flask (Python)

Flask is a lightweight Python framework that turns your Python code into a small web app. It’s like the brain behind the scenes: it receives the user’s question, passes it to the AI, and returns the answer.

Step-by-Step Tutorial

Step 1: Open Google Colab

Go to Google Colab and open a new notebook.

Step 2: Install the Necessary Tools

!pip install flask flask-ngrok transformers torch pyngrok

Step 3: Log In to Hugging Face

- Visit https://huggingface.co

- Log in → Go to Settings > Access Tokens

- Copy your token and paste it here:

from huggingface_hub import login

HUGGING_FACE_KEY = "paste-your-token-here"

login(HUGGING_FACE_KEY)

Step 4: Load Meta’s LLaMA Model

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

model_name = "meta-llama/Llama-3.2-1B"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

Step 5: Let’s Build the Backend (the Brain of the Website)

What is the backend?

It’s like the chef in a restaurant. The person places an order (a question), and the backend prepares the answer using AI.

    from flask import Flask, request, jsonify
    from flask_ngrok import run_with_ngrok

    app = Flask(__name__)
    run_with_ngrok(app)  # expose the app through a public ngrok link when it runs

    @app.route("/ask", methods=["POST"])
    def ask():
        # Read the user's question from the JSON body of the request
        prompt = request.json.get("prompt", "")
        inputs = tokenizer(prompt, return_tensors="pt")
        # Pass the attention mask too, and cap the number of newly generated tokens
        outputs = model.generate(**inputs, max_new_tokens=100)
        response = tokenizer.decode(outputs[0], skip_special_tokens=True)
        return jsonify({"response": response})

Step 6: Create the Web Page (Frontend)

Now let’s build the part that people see and interact with: the frontend.

    %%writefile index.html
    <!DOCTYPE html>
    <html>
    <head><title>Ask the AI</title></head>
    <body>
      <h2>Ask the AI</h2>
      <textarea id="prompt" rows="4" cols="50" placeholder="Type your question here..."></textarea><br>
      <button onclick="askAI()">Ask</button>
      <p><strong>Response:</strong> <span id="response"></span></p>
      <script>
        async function askAI() {
          const prompt = document.getElementById("prompt").value;
          const res = await fetch("/ask", {
            method: "POST",
            headers: { "Content-Type": "application/json" },
            body: JSON.stringify({ prompt: prompt })
          });
          const data = await res.json();
          document.getElementById("response").innerText = data.response;
        }
      </script>
    </body>
    </html>

Step 7: Connect Everything and Run the App

    from flask import send_from_directory

    @app.route('/')
    def serve_frontend():
        return send_from_directory('.', 'index.html')

    app.run()

You will receive a link like this one: https://xxxxx.ngrok.io

Click it and…

Mission Accomplished!

You have just built:

- An AI assistant running on Meta’s LLaMA model

- A custom web page

- A Python backend that connects everything

You are now programming with real AI models! Welcome to the world of artificial intelligence development.

Mini-Course: Start Gig Work with Generative AI

Our lab is excited to share a brand-new mini-course designed to help you start your freelance journey using the power of Generative AI!

This course walks you through how to:

- Identify the best freelance gigs based on your skills and cultural background

- Use AI tools to find opportunities that help you earn more & work smarter

- Take actionable steps to launch your freelance business

Whether you’re just starting out or looking to level up your freelance game, this course gives you the tips, tools, and mindset to succeed.

Check it out here: Watch Now

#Freelancing #GigWork #GenerativeAI #AIforWork #WorkSmarter #FreelancerTips

Navigating the Gig Economy with AI: Building a Smart Career Guidance System

By: Aishwarya Abbimutt Nagendra Kumar (Civic AI Lab Research Assistant)

In today’s fast-paced gig economy, freelancers and gig workers often face challenges in navigating career growth. The lack of structured guidance makes it difficult to identify in-demand skills, map transferable expertise to emerging roles, or even decide the next best career move. With a surge in remote work and technology-driven industries, the need for personalized career advice has never been more critical.

To address this problem, I embarked on a project to build an AI-driven Skill Recommendation System that empowers gig workers by providing actionable career insights. By leveraging cutting-edge generative AI and retrieval-augmented generation (RAG) techniques, this system generates tailored recommendations based on user profiles, market trends, and income data. Here’s how I approached this exciting challenge.

Understanding the Problem

Gig workers often lack access to structured career counseling or platforms that provide:

- A clear understanding of in-demand skills in the current market.

- Insight into how their existing skills can translate into better-paying roles.

- Recommendations for upskilling or career switches based on industry trends.

While some online platforms provide generalized advice, there is a gap in delivering personalized and data-driven recommendations tailored to individual profiles and aspirations.

The Solution

The system I created combines two smart components to help gig workers make better career decisions:

- Market Insights Engine: This part of the system looks up and gathers important information about the job market, like which skills are in demand and what career paths are trending.

- Personalized Career Advisor: This part takes a user’s skills and goals and suggests the best career paths or skills to learn to move forward in their career.

Together, these components work smoothly to analyze the user’s information and provide clear, practical advice through an easy-to-use interface.

The Pipeline

1. Retrieval-Augmented Generation (RAG) Pipeline

The RAG pipeline is the backbone of the system, enabling contextual retrieval of market data and generating insightful responses. Here’s how it works:

- Document Processing: The pipeline processes large datasets of market trends and job requirements, breaking them into manageable chunks for analysis.

- Embeddings and Semantic Search: Using an embedding model, the pipeline converts text into vector representations, which are stored in ChromaDB, a high-performance vector database. This allows for efficient retrieval of relevant data based on user queries.

- Response Generation: Leveraging a generative LLM (I have used the Llama 3.2 model), the pipeline synthesizes a comprehensive response by combining retrieved information with generative capabilities.

This integration of retrieval and generation ensures that the system provides career advice backed by real-time market data, enhancing its relevance and precision.
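To make the retrieval step concrete, here is a minimal sketch of chunking, embedding, and semantic search. It is not the project’s code: a word-count vector stands in for the embedding model, a plain Python list stands in for ChromaDB, and the market notes are made up for illustration.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 12) -> list[str]:
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


# Made-up market notes, for illustration only.
docs = [
    "Data labeling gigs are growing and reward attention to detail.",
    "Translation gigs reward bilingual writing skills and cultural knowledge.",
    "Python scripting is requested in many automation gigs.",
]
chunks = [c for d in docs for c in chunk_text(d)]
top = retrieve("Which gigs need Python skills?", chunks, k=1)
print(top)
```

In a full pipeline, the retrieved chunks would be passed to the generative model as context for the answer.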

2. Recommender Pipeline

The recommender pipeline delivers personalized career advice through four main tasks:

- Skill Mapping: Matches the user’s existing skills with in-demand job roles.

- Income Comparison: Provides a comparative analysis of income potential across suggested roles.

- Career Recommendation: Based on the skill mapping and income comparison, the best career path is chosen.

- Upskilling Recommendations: Suggests specific skills to learn, complete with links to curated resources for training.
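The four tasks above can be sketched in a few lines of Python. The scoring rule here (share of a role’s skills the user already has, with income gain as a tie-breaker) is an assumption for illustration, since the post does not describe how the actual system ranks roles, and the roles and incomes are hypothetical.

```python
def skill_match(user_skills: set[str], role_skills: set[str]) -> float:
    """Skill mapping: fraction of a role's skills the user already has."""
    return len(user_skills & role_skills) / len(role_skills) if role_skills else 0.0


def recommend(user_skills: set[str], current_income: int, roles: dict) -> list[dict]:
    """Rank roles by skill match, breaking ties by income gain."""
    scored = []
    for name, info in roles.items():
        scored.append({
            "role": name,
            "match": skill_match(user_skills, info["skills"]),
            "income_gain": info["income"] - current_income,   # income comparison
            "to_learn": sorted(info["skills"] - user_skills),  # upskilling suggestions
        })
    return sorted(scored, key=lambda r: (r["match"], r["income_gain"]), reverse=True)


# Hypothetical roles and monthly incomes, for illustration only.
roles = {
    "data annotator": {"skills": {"attention to detail", "spreadsheets"}, "income": 2000},
    "junior web developer": {"skills": {"html", "css", "javascript", "git"}, "income": 3500},
    "virtual assistant": {"skills": {"email", "spreadsheets", "scheduling"}, "income": 2200},
}
ranking = recommend({"html", "css", "spreadsheets"}, 1800, roles)
best = ranking[0]
print(best["role"], best["to_learn"])
```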

The Language Model (LLM) pipeline is at the heart of the recommendation system, designed to generate career guidance and skill suggestions. For this purpose, we utilize the Llama 3.2 Instruct-tuned model, known for its capability to generate nuanced and contextually relevant outputs.

How It Works

The LLM pipeline begins by collecting user input, such as their skills and current income. This data is dynamically integrated into carefully crafted prompts. Significant effort has been invested in prompt engineering to ensure that the LLM comprehends the user’s context and provides insightful recommendations. We also use chain-of-thought prompting, which encourages the model to reason step-by-step, resulting in more logical and detailed outputs.
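As a rough illustration of how user input can be inserted into a chain-of-thought prompt, consider the sketch below. The template wording, the currency, and the function name build_prompt are assumptions; the post does not show the prompts the system actually uses.

```python
# Hypothetical template: the numbered steps ask the model to reason before answering.
PROMPT_TEMPLATE = """You are a career advisor for gig workers.

User skills: {skills}
Current monthly income: {income} USD

Think step by step:
1. List roles that match the user's skills.
2. Compare the income potential of those roles with the current income.
3. Recommend one career path and the skills to learn next.

Finish with a line that starts with "Recommendation:"."""


def build_prompt(skills: list[str], income: int) -> str:
    """Fill the template with the user's skills and income."""
    return PROMPT_TEMPLATE.format(skills=", ".join(skills), income=income)


prompt = build_prompt(["copywriting", "basic Python"], 1500)
print(prompt)
```

Asking for a fixed final line makes the recommendation easier to pull out of a longer, step-by-step answer.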

Model Access and Integration

The Llama 3.2 model is accessed via the Hugging Face API. After obtaining permissions from both Meta (the model’s creator) and Hugging Face, an API token was securely stored in a .env file. This token is used to integrate the model into the pipeline, ensuring seamless access.
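For readers unfamiliar with .env files, the sketch below shows one way a token can be read from such a file using only the standard library. The variable name HF_TOKEN and the helper load_env_file are assumptions for illustration; the post does not say how the file is read, and a library such as python-dotenv is a common alternative.

```python
import os
import tempfile


def load_env_file(path: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line or line.startswith("#") or "=" not in line:
                continue
            key, _, value = line.partition("=")
            values[key.strip()] = value.strip().strip('"').strip("'")
    return values


# Demo with a temporary file and a fake token; a real .env file should stay out of version control.
with tempfile.TemporaryDirectory() as tmp:
    env_path = os.path.join(tmp, ".env")
    with open(env_path, "w", encoding="utf-8") as f:
        f.write("# Hugging Face credentials\nHF_TOKEN=hf_fake_token_for_demo\n")
    env = load_env_file(env_path)

token = env.get("HF_TOKEN")
print("token loaded:", token is not None)
```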

Integration of LLM and RAG Pipelines

The integration of the LLM and RAG pipelines ensures that the system provides recommendations informed by both user-specific data and market-driven insights. The integration workflow is implemented as follows:

- The RAG pipeline retrieves relevant contextual information and stores it in a JSON file.

- This file is dynamically referenced in the LLM pipeline’s prompts.

- The combined output is presented to the user via a user-friendly web interface built using Gradio.
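The JSON hand-off between the two pipelines could look roughly like the sketch below. The file name rag_context.json, the "context" key, and both function names are hypothetical; they only illustrate the idea of one pipeline writing retrieved context that the other reads into its prompt.

```python
import json
import os
import tempfile


def save_context(chunks: list[str], path: str) -> None:
    """RAG side: store the retrieved chunks as JSON."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump({"context": chunks}, f, indent=2)


def prompt_with_context(path: str, question: str) -> str:
    """LLM side: read the stored context and place it ahead of the question."""
    with open(path, encoding="utf-8") as f:
        chunks = json.load(f)["context"]
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Market context:\n{context}\n\nQuestion: {question}"


# A temporary directory keeps the demo from leaving files behind.
with tempfile.TemporaryDirectory() as tmp:
    context_path = os.path.join(tmp, "rag_context.json")
    save_context(["Python scripting is requested in many automation gigs."], context_path)
    final_prompt = prompt_with_context(context_path, "What should I learn next?")

print(final_prompt)
```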

Gradio Interface

The Gradio interface allows users to input their skills and income and receive actionable career advice.

Future Work

- Evaluation Metrics: Robust evaluation methodologies will be developed to quantify the effectiveness of the recommendations.

- Fine-tuning the LLM: Future iterations will involve fine-tuning the LLM on real gig worker profiles for enhanced personalization.

- Expanded Data Sources: Incorporating more diverse and comprehensive datasets will improve the accuracy of recommendations.

Conclusion

This project demonstrates the transformative potential of generative AI in career guidance. By addressing the unique challenges faced by gig workers, this system provides a valuable resource for upskilling, career switching, and achieving financial growth.

Stay tuned for updates and enhancements! Check out the GitHub repository here:

Ethical AI at Akamai

I was truly honored to give a lightning talk on Ethical AI at Akamai Technologies in collaboration with The Mass Technology Leadership Council.

Opening Words from Akamai CTO

My Talk: Ethical AI for All Stakeholders

Generative AI for Ethical Workflows

Women in AI: Liz Graham’s Inspiring Leadership

AI Literacy & The Kendall Project

Gratitude & Next Steps

Using AI for Peace-Building with IFIT

On the last Monday of the year, I (Dr. Savage) had the privilege of collaborating with my lab to deliver a mini-course on prompt engineering for the Institute for Integrated Transitions (IFIT). IFIT’s remarkable work supports fragile and conflict-affected states in achieving inclusive negotiations and sustainable transitions out of war, crisis, or authoritarianism. From their role in the Colombia-FARC peace-building process to their ongoing efforts in Sudan, their impact is both inspiring and transformative.

Course Highlights

Our mini-course focused on equipping IFIT with tools to use generative AI in their peace-building initiatives. Specifically, we explored how to design surveys that can illuminate regional polarization dynamics in Sudan. Here’s what we covered:

- Creating open-ended and multiple-choice survey questions to understand the effects of tribal and ethnic affiliations on polarization.

- Using AI to iterate and refine survey questions for clarity and cultural sensitivity.

- Tailoring surveys for different populations and translating them into local languages using AI tools.

- Employing AI for A/B testing to optimize survey effectiveness.

A Team Effort

This course was a true team effort. A big thank you to Jesse Nava, our program manager, and Rafael Morales from UNAM, who leads our gov tech research. Their extensive experience creating surveys for marginalized communities and working with gang-affiliated networks added invaluable depth and expertise to the course.

Explore More

If you’re interested in learning more about how generative AI can support peace-building initiatives, we’ve made our slides available for further exploration. We hope they inspire new ways to leverage technology for positive change.

#AIforGood #PeaceBuilding #GenerativeAI #PromptEngineering

Hello World Tutorial with Meta’s Llama 3.2

Hey there, tech explorers! Ever wanted to whip up some magical AI-generated text, like having robot Shakespeares at your fingertips? Well, today’s your lucky day! We’re here to break down a piece of Python code that lets you chat with a fancy AI model and generate text like pros. No PhDs required, we promise.

First Things First: The Toolbox

Before we can talk to the AI, we need to grab some tools. Think of it like prepping for a camping trip: you need a tent (the model) and some snacks (the tokenizer).

    pip install transformers torch accelerate

This command installs the Transformers library, which is like the Swiss Army knife of AI text generation. It’s brought to you by Hugging Face (no, not the emoji, it’s a company!). It also installs PyTorch, which runs the model, and Accelerate, which the device_map="auto" setting in Step 2 relies on.

Step 1: Unlock the AI Vault

We’ll need to log in to Hugging Face to get access to their cool models. Think of it as showing your library card before borrowing books.

    from huggingface_hub import login

    login("YOUR HUGGING FACE LOGIN")

Replace "YOUR HUGGING FACE LOGIN" with your actual login token. It’s how we tell Hugging Face, “Hey, it’s us—let us in!”

Step 2: Meet the Model

Now we load the AI brain. In our case, we’re using Meta’s Llama 3.2, which sounds like a cool llama astronaut but is actually an advanced AI model.

    from transformers import AutoTokenizer, AutoModelForCausalLM
    import torch

    model_name = "meta-llama/Llama-3.2-1B"
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name, device_map="auto")

– Tokenizer: This breaks down your input text into AI-readable gibberish.

– Model: The big brain that generates the text.

Step 3: Give It Something to Work With

Now comes the fun part: asking the AI a question or giving it a task.

    input_text = "Explain the concept of artificial intelligence in simple terms."
    inputs = tokenizer(input_text, return_tensors="pt")

– input_text: This is your prompt—what you’re asking the AI to do.

– tokenizer: It converts your input into numbers the model can understand.
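To make "words into numbers" concrete, here is a toy sketch of what a tokenizer does. It is not how Llama's real tokenizer works (that one splits text into subword pieces using a learned vocabulary); the tiny word-level vocabulary and the `ToyTokenizer` class below are made up purely for illustration.

```python
class ToyTokenizer:
    """A made-up word-level tokenizer: one integer ID per known word."""

    def __init__(self, words):
        # ID 0 is reserved for words we have never seen.
        self.id_to_word = ["<unk>"] + sorted(set(words))
        self.word_to_id = {w: i for i, w in enumerate(self.id_to_word)}

    def encode(self, text):
        # Lowercase, split on whitespace, and look up each word's ID.
        return [self.word_to_id.get(w, 0) for w in text.lower().split()]

    def decode(self, ids):
        # Map each ID back to its word and join with spaces.
        return " ".join(self.id_to_word[i] for i in ids)


toy = ToyTokenizer(["explain", "artificial", "intelligence", "simply"])
ids = toy.encode("Explain artificial intelligence simply")
print(ids)
print(toy.decode(ids))
```

The round trip (text to IDs and back) is the same idea the real tokenizer applies in Steps 3 and 5, just with a far larger vocabulary.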

Step 4: Let the Magic Happen

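Here’s where the AI flexes its muscles and generates text based on your prompt.

    outputs = model.generate(
        inputs["input_ids"].to(model.device),  # move the prompt to the model's device
        max_length=100,
        num_return_sequences=1,
        do_sample=True,  # sample words so temperature and top_p take effect
        temperature=0.7,
        top_p=0.9,
    )

– inputs["input_ids"].to(model.device): Sends the prompt to wherever the model was loaded: your GPU if you’ve got one, otherwise the CPU.

– max_length: The maximum total length in tokens, counting your prompt plus the AI’s response.

– do_sample: Turns on random sampling, which temperature and top_p need in order to do anything.

– temperature: Controls creativity. Lower values give more predictable text; higher values give more varied text.

– top_p: Controls how “risky” the word choices are by sampling only from the most likely words whose probabilities add up to top_p.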
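For the curious, here is a rough, pure-Python sketch of what temperature and top_p do to the model's word choices. It is a simplified illustration under our own assumptions, not the Transformers library's actual implementation: the four candidate words, their scores, and both helper functions are invented for this example.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their total probability reaches top_p."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, running = [], 0.0
    for i in ranked:
        kept.append(i)
        running += probs[i]
        if running >= top_p:
            break
    total = sum(probs[i] for i in kept)
    # Renormalize so the surviving probabilities sum to 1.
    return {i: probs[i] / total for i in kept}


# Hypothetical scores for four candidate next words.
words = ["cat", "dog", "llama", "spaceship"]
logits = [2.0, 1.5, 1.0, -1.0]

probs = softmax_with_temperature(logits, temperature=0.7)
candidates = top_p_filter(probs, top_p=0.9)

rng = random.Random(0)  # fixed seed so the sketch is repeatable
choice = rng.choices(list(candidates), weights=list(candidates.values()))[0]
print({words[i]: round(p, 3) for i, p in candidates.items()}, "->", words[choice])
```

With these made-up scores, the unlikely "spaceship" is cut off by the top_p filter before sampling, which is how top_p keeps the wildest word choices out of the answer.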

Step 5: Ta-Da! Your Answer

Finally, we take the AI’s response and turn it back into human language.

    generated_text = tokenizer.decode(outputs[0], skip_special_tokens=True)
    print(generated_text)

skip_special_tokens=True tells the tokenizer, “Please leave out special marker tokens, like the ones for start and end of text, from the answer.”

So, What’s Happening Under the Hood?

Here’s a quick recap of how this works:

- We give the AI a prompt (our input text).

- The tokenizer translates our words into numbers.

- The model (our AI brain) uses these numbers to predict the best possible next words.

- It spits out a response, which the tokenizer translates back into words.

It’s like ordering a coffee at Starbucks: we place the order, the barista makes it, and voilà—our coffee is ready!
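The four steps listed above can be sketched end to end with a toy stand-in for the model. Everything here is invented for illustration: the vocabulary, the `NEXT_WORD` lookup table (a real model computes probabilities with a neural network rather than looking up one fixed answer), and the helper names. What it does mirror is the loop itself: encode the prompt, predict one token at a time until max_length or an end marker is reached, then decode.

```python
# Toy vocabulary: position in the list is the token ID.
VOCAB = ["<eos>", "ai", "is", "software", "that", "learns", "from", "data"]
WORD_TO_ID = {word: i for i, word in enumerate(VOCAB)}
EOS_ID = WORD_TO_ID["<eos>"]  # special "end of text" marker

# Stand-in for the model: the single most likely next word after each word.
NEXT_WORD = {
    "ai": "is", "is": "software", "software": "that", "that": "learns",
    "learns": "from", "from": "data", "data": "<eos>",
}


def encode(text):
    """Tokenizer step: words -> numbers."""
    return [WORD_TO_ID[word] for word in text.lower().split()]


def generate(input_ids, max_length):
    """Model step: append one predicted token at a time.

    As with max_length in Hugging Face's generate, the limit counts the
    prompt tokens too.
    """
    ids = list(input_ids)
    while len(ids) < max_length and ids[-1] != EOS_ID:
        next_word = NEXT_WORD.get(VOCAB[ids[-1]], "<eos>")
        ids.append(WORD_TO_ID[next_word])
    return ids


def decode(ids, skip_special_tokens=True):
    """Tokenizer step in reverse: numbers -> words, optionally hiding <eos>."""
    words = [VOCAB[i] for i in ids if not (skip_special_tokens and i == EOS_ID)]
    return " ".join(words)


output_ids = generate(encode("AI is"), max_length=100)
print(decode(output_ids))
```

Calling `decode(output_ids, skip_special_tokens=False)` shows the `<eos>` marker that is normally hidden, which is the toy equivalent of the "weird symbols" mentioned in Step 5.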

Why Should We Care?

Generative AI APIs like this are the backbone of chatbots, creative writing tools, and even marketing copy generators. Whether we’re developers, writers, or just curious, playing with this code is a great way to dip our toes into the AI ocean.

Ready to Try It?

Copy the code, tweak the prompt, and see what kind of magic we can summon. Who knows? We might create the next big AI-powered masterpiece—or at least have some fun along the way.

Now go forth and generate!

Tutorial “Hello World” con Llama 3.2 de Meta

¡Hola, exploradores tecnológicos! ¿Alguna vez quisieron crear texto mágico generado por IA, como si tuvieran a Shakespeare robótico a su disposición? ¡Hoy es su día de suerte! Aquí les explicamos un código en Python que les permitirá chatear con un modelo de IA avanzado y generar texto como unos profesionales. Sin necesidad de doctorados, se los prometemos.

Primero lo primero: Las herramientas

Antes de poder hablar con la IA, necesitamos algunas herramientas. Piensen en esto como prepararse para un viaje de campamento: necesitan una tienda de campaña (el modelo) y algo de comida (el tokenizador).

    pip install transformers torch accelerate

Este comando instala la biblioteca Transformers, que es como la navaja suiza de la generación de texto con IA. Está desarrollada por Hugging Face (no, no es un emoji, ¡es una empresa!). También instala PyTorch, que ejecuta el modelo, y Accelerate, que necesita la opción device_map="auto" del Paso 2.

Paso 1: Acceder al mundo de la IA

Necesitamos iniciar sesión en Hugging Face para acceder a sus modelos increíbles. Es como mostrar tu tarjeta de biblioteca antes de pedir prestado un libro.

    from huggingface_hub import login

    login("TU TOKEN DE HUGGING FACE")

Reemplacen "TU TOKEN DE HUGGING FACE" con su token real. Es como decirle a Hugging Face: “¡Oye, somos nosotros, déjanos entrar!”

Paso 2: Conozcan el modelo

Ahora cargamos el cerebro de la IA. En este caso, usamos Llama 3.2 de Meta, que suena como un astronauta llama genial, pero en realidad es un modelo avanzado de IA.

    from transformers import AutoTokenizer, AutoModelForCausalLM
    import torch

    model_name = "meta-llama/Llama-3.2-1B"
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name, device_map="auto")

– Tokenizador: Divide su texto de entrada en una especie de código comprensible para la IA.

– Modelo: El cerebro gigante que genera el texto.

Paso 3: Denle algo con qué trabajar

Ahora viene la parte divertida: hacerle una pregunta a la IA o darle una tarea.

    input_text = "Explica el concepto de inteligencia artificial en términos simples."
    inputs = tokenizer(input_text, return_tensors="pt")

– input_text: Este es su mensaje, lo que le piden a la IA.

– tokenizer: Convierte su entrada en números que el modelo puede entender.

Paso 4: Dejen que ocurra la magia

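Aquí es donde la IA muestra sus músculos y genera texto basado en su mensaje.

    outputs = model.generate(
        inputs["input_ids"].to(model.device),  # mueve el mensaje al dispositivo del modelo
        max_length=100,
        num_return_sequences=1,
        do_sample=True,  # activa el muestreo para que temperature y top_p tengan efecto
        temperature=0.7,
        top_p=0.9,
    )

– inputs["input_ids"].to(model.device): Envía el mensaje a donde se cargó el modelo: su GPU si tienen una, o la CPU si no.

– max_length: La longitud máxima total en tokens, contando su mensaje más la respuesta de la IA.

– do_sample: Activa el muestreo aleatorio, que temperature y top_p necesitan para tener efecto.

– temperature: Controla la creatividad. Valores bajos dan texto más predecible; valores altos, texto más variado.

– top_p: Controla qué tan “arriesgadas” son las palabras elegidas, al muestrear solo entre las palabras más probables cuya probabilidad acumulada llega a top_p.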

Paso 5: ¡Listo! Su respuesta

Finalmente, tomamos la respuesta de la IA y la convertimos de nuevo en lenguaje humano.

    generated_text = tokenizer.decode(outputs[0], skip_special_tokens=True)
    print(generated_text)

skip_special_tokens=True le dice al tokenizador: “Por favor, deja fuera de la respuesta los tokens especiales, como los que marcan el inicio y el fin del texto.”

¿Qué sucede detrás de escena?

Aquí una analogía rápida para entender cómo funciona:

- Le damos a la IA un mensaje (nuestro texto de entrada).

- El tokenizador traduce nuestras palabras en números.

- El modelo (el cerebro de la IA) usa estos números para predecir las mejores palabras posibles.

- Nos entrega una respuesta, que el tokenizador traduce de nuevo en palabras.

Es como pedir un café en Starbucks: haces el pedido, el barista lo prepara y voilà, ¡tu café está listo!

¿Por qué debería importarnos?

Las APIs de IA generativa como esta son la columna vertebral de los chatbots, herramientas de escritura creativa e incluso generadores de contenido para marketing. Ya sea que seamos desarrolladores, escritores o simplemente curiosos, jugar con este código es una excelente forma de sumergirnos en el mundo de la IA.

¿Listos para intentarlo?

Copien el código, ajusten el mensaje y vean qué tipo de magia pueden invocar. ¿Quién sabe? Podrían crear la próxima gran obra maestra impulsada por IA, o al menos, divertirse un poco en el proceso.

¡Ahora vayan y generen!

Using Generative AI to Create Sustainable Business Plans: A Mini-Course

We had the incredible opportunity to deliver a mini-course at the University of Sonora on how to use generative AI to craft sustainable business plans. The session was designed to empower students and budding entrepreneurs to integrate cutting-edge AI tools, such as ChatGPT, into their business planning processes. This event showcased the practical applications of AI for innovation and sustainability, offering hands-on experience and collaborative learning.

The Course in Action

The course focused on teaching participants how generative AI can assist in every stage of business planning, including:

- Brainstorming Ideas: Using AI to refine concepts, identify potential gaps, and generate creative alternatives.

- Market Research: Employing AI for customer analysis, trend identification, and competitive landscape evaluation.

- Feasibility Assessments: Exploring cost structures, revenue models, and risk mitigation strategies.

- Customer Feedback Simulation: Generating insights by simulating customer reactions and improving marketing strategies.

- SWOT Analysis: Leveraging AI to identify internal strengths and weaknesses and external opportunities and threats.

Participants were encouraged to experiment with AI tools to practice crafting mission statements, vision statements, and value propositions tailored to their sustainable business goals.

Collaborators Making a Difference

The course was a collaborative effort between three instructors, each bringing unique expertise to the table:

- Dr. Saiph Savage

A computer scientist and expert in human-centered AI, Saiph provided a technical and strategic perspective on how AI can be applied to the future of work and sustainable business practices.

- Dr. Rafael Morales

Originally from Mexico City and holding a PhD in Political Science from UNAM, Rafael brought a nuanced understanding of how to align AI-driven business strategies with government policies. He highlighted opportunities for collaboration between businesses and governments to promote social good.

- Jesse Nava

With extensive experience launching ventures for marginalized communities, including startups supporting low-income Hispanics and formerly incarcerated individuals in the U.S., Jesse shared real-world insights into how AI can help create inclusive, impactful business models.

Why Generative AI?

Generative AI, like ChatGPT, offers a unique advantage for entrepreneurs by providing accessible tools to:

- Refine business ideas and strategies.

- Perform rapid iterations to improve outcomes.

- Enhance collaboration and creativity.

- Develop sustainable and socially conscious plans.

The session emphasized the importance of human-centered design to ensure that AI tools remain inclusive, adaptable, and aligned with ethical practices.

Check out the full slides here

In the mini-course, we also guided students through using generative AI as a powerful tool for developing business ideas. We taught them how to craft effective prompts to:

- Obtain Feedback on Business Ideas: Generate detailed insights and suggestions to refine their business concepts.

- Identify Potential Markets: Explore and analyze who their target audience could be based on their business idea.

- Create Social Media Content: Develop engaging and tailored social media posts targeting their identified market to build awareness and engagement.

- Conduct Key Business Analyses: Perform essential evaluations such as SWOT analyses (Strengths, Weaknesses, Opportunities, Threats) and break-even analyses to better understand the viability and financial dynamics of their business plans.

By leveraging generative AI in these areas, students gained hands-on experience in building and refining comprehensive business strategies effectively and creatively.

Gratitude and Looking Ahead

We want to thank the University of Sonora, José Montaño Sánchez, and Dr. Alma Brenda Leyva Carreras for the invitation to deliver this course. It was an honor to collaborate with a multidisciplinary group of students and share knowledge at the intersection of AI, business, and sustainability.

As we move forward, we aim to continue these efforts, fostering a deeper understanding of how AI can empower diverse communities and create a brighter, more sustainable future for all.

Keynote Speaker at the Mexican AI Conference

Caption: My father, me, and Mexican Professor Beto Ochoa-Ruiz (chair of the Mexican AI Conference) at MICAI in Puebla.

It was an incredible honor to be the keynote speaker at the Mexican AI Conference, a prestigious event with over 40 years of history organized by the Mexican Society for Artificial Intelligence. I presented my research on designing worker-centric AI tools, and it was truly inspiring to see such a vibrant and thriving AI community in Mexico.

Conference Highlights

The conference itself was filled with fascinating talks and discussions. I especially enjoyed the presentation by Professor XX from the University of Toronto, who is pioneering AI systems to quantify and understand smell—an area of AI that I had not previously considered but found fascinating.

I also appreciated reconnecting with Professor Ricardo Baeza Yates, a distinguished researcher at Northeastern University. His work in establishing impactful AI labs in both industry and academia has been transformative, particularly in Latin America. His efforts with Yahoo Research have opened new pathways for research and innovation in the region, creating opportunities for countless researchers.

INAOE: A Unique Setting

The conference took place at the National Institute of Astrophysics, Optics, and Electronics (INAOE), a premier research institution in Mexico. Situated in a serene wooded area, INAOE is home to some stunning telescopes, blending cutting-edge technology with natural beauty. This unique setting added a special touch to the conference, enhancing the overall experience.

Caption: An overview of the speakers, participants, and organizers of the conference.

Special Moments with My Father

One of the most memorable aspects of this experience was attending the conference with my father, who has dedicated much of his career to AI and robotics. We drove together from Mexico City to Puebla, where the conference was held, and the journey gave us a rare opportunity to spend quality time together. Our conversations ranged from AI to personal reflections, making this trip a deeply meaningful experience for both of us.

Exploring the City of Puebla

Puebla is an impressive city, renowned for its rich history and architectural beauty. During our visit, I was particularly captivated by its churches, which showcase the Churrigueresque style. This ornate style is a fusion of local Indigenous art and Spanish Baroque, characterized by its elaborate decorations and intricate details. It stands as a testament to the cultural confluence that shaped Puebla’s identity.

Final Reflections

Overall, it was a privilege to be part of such a dynamic and supportive AI research community. The Mexican AI Conference not only provided a platform to share my research but also allowed me to engage with brilliant minds and immerse myself in the rich cultural and scientific landscape of Puebla.

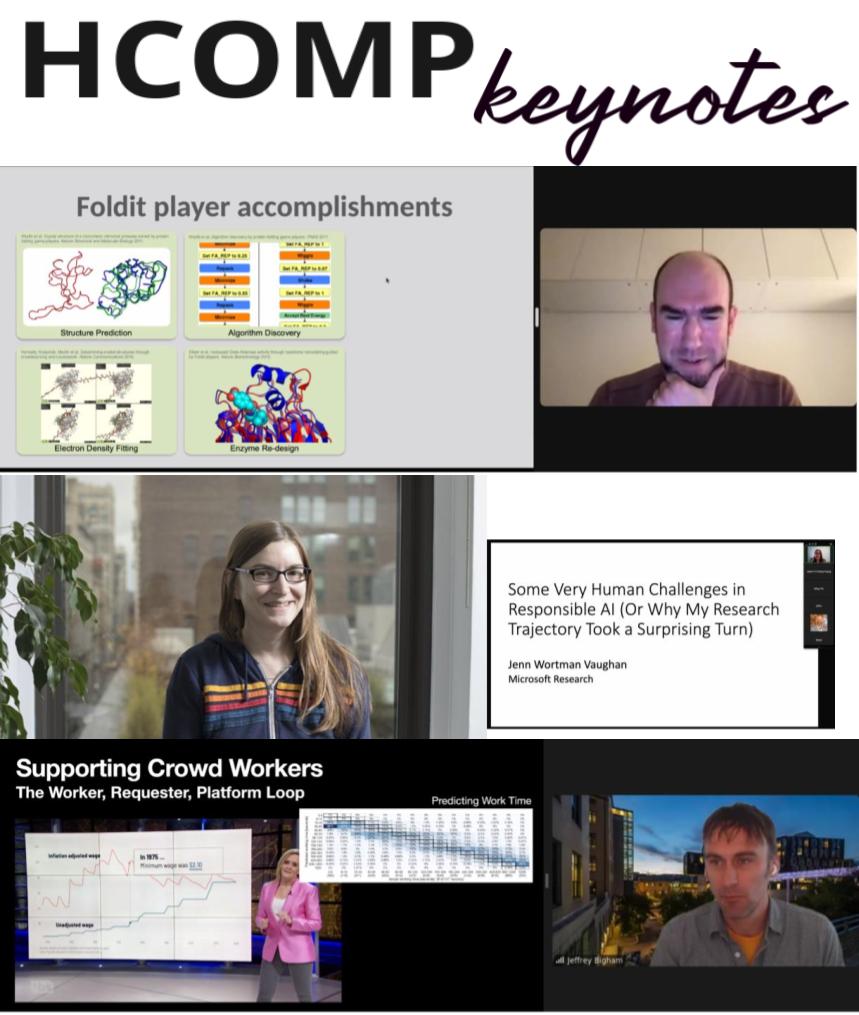

Workshop on AI Tools for Labor at the AAAI HCOMP Conference

We recently had the honor of co-organizing a workshop at the AAAI Human Computation and Crowdsourcing Conference (HCOMP) in Pittsburgh. This workshop focused on designing AI tools for the future of work and brought together diverse perspectives and innovative ideas.

Keynote Speaker: Sara Kingsley

Caption: Sara Kingsley giving her keynote at our workshop, where she discussed her research on designing human-centered AI for the future of work. She focuses on using red-teaming techniques to conduct online audits of AI in the workplace, identify biases in current AI tools, and then design AI-driven interventions to address those challenges.

One of the highlights of our workshop was having Sara Kingsley as our keynote speaker. Sara is a researcher at Carnegie Mellon University and has extensive experience working at Meta and within the U.S. federal government, specifically at the Department of Labor.

In her engaging talk, Sara shared her work using red teaming—a process where experts challenge and test systems to find vulnerabilities or weaknesses—to identify problematic job ads and content related to job advertising. She explained how she applies red teaming to ensure that job ads do not propagate harmful biases or misleading information. This approach allows her to design human-centered AI tools that can create better, more equitable AI-driven futures for workers.

This type of research is critical as it helps to identify and mitigate potential biases and harms in AI systems before they impact real users. We were especially proud to note that Sara recently won the best paper award at HCOMP’24 on this very topic. Congratulations to Sara on this well-deserved recognition! We are proud to have had her as a keynote speaker in our workshop.

Co-Design Activity with Community Partners

Caption: Our research collaborator Jesse Nava in his workforce development programs for former/current prisoners.

Another unique aspect of our workshop was the co-design activity we held with workshop participants and current and former prisoners from California’s Department of Corrections and Rehabilitation. Our community partner, Jesse Nava, joined us via call, making this session truly impactful.

During this co-design activity, participants proposed ideas for generative AI tools that could support the reintegration of former prisoners into the workforce. Jesse Nava provided invaluable feedback on these proposals, sharing his perspective on potential harms, biases, and areas where these tools could be improved to better serve the formerly incarcerated population.

This was a unique experience as it allowed us to receive direct feedback from real-world stakeholders who would be directly affected by these AI tools. The opportunity to co-design with such engaged partners highlighted the importance of including diverse voices and lived experiences in the development process.

Closing Thoughts

We concluded the workshop with a sense of excitement and renewed commitment to continue designing the future of generative AI tools together. This collaborative approach is key to creating technologies that are inclusive, fair, and genuinely supportive of the communities they aim to serve.

Thank you to everyone who participated and contributed to making this workshop a success. We look forward to future opportunities to innovate, collaborate, and create impactful AI solutions.

Driving the Future of Work in Mexico through Artificial Intelligence: My Experience with the Global Partnership on AI (GPAI)

As an expert selected by the Mexican federal government to be part of the Global Partnership on AI (GPAI), I have had the honor of contributing to the working group on “AI for the Future of Work.” My participation in this group has been an enriching and transformative experience, especially in the context of how artificial intelligence (AI) can and should positively impact the labor market in Mexico.

During my time with GPAI, I led several key initiatives that emphasize the importance of integrating AI into the workplace. One of the most notable was securing €20,000 to fund internships focused on creating AI for workers, specifically for Mexican students. These internships not only provide development opportunities for our youth but also foster the creation of technology that can improve working conditions in our country.

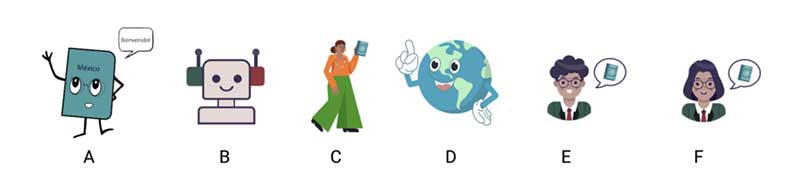

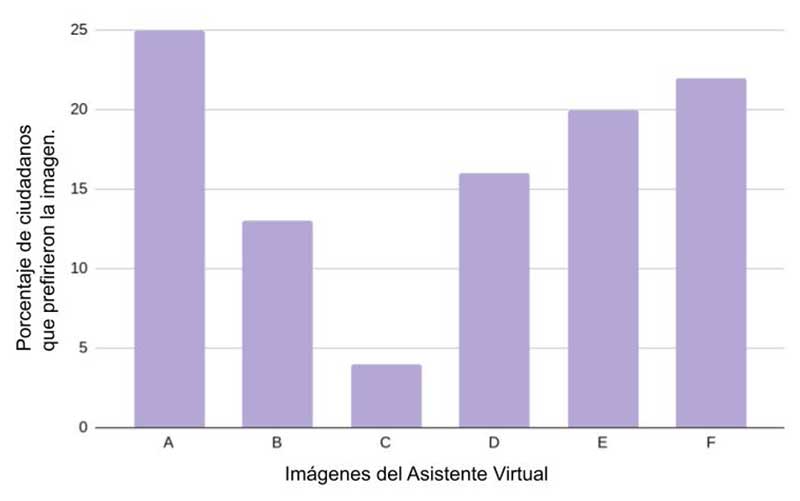

In these internships, we taught students the importance of developing human-centered artificial intelligence, an approach that prioritizes the well-being and needs of people in the design and implementation of technologies. Students learned to apply these principles while working on concrete projects, such as developing intelligent assistants for the Ministry of Foreign Affairs. These assistants were specifically designed to facilitate passport processing, improving the efficiency and accessibility of these services for Mexican citizens.

Additionally, in collaboration with INFOTEC, several UNAM students were hired as interns to implement artificial intelligence solutions in various government areas. This experience was crucial for students to apply their knowledge in a real-world setting and contribute directly to the modernization of the public sector. Collaborations between government and academia, like this one, are essential for integrating cutting-edge technologies and ensuring that Mexico remains at the forefront of AI use to improve public services.

Another significant contribution was leading the development of a new AI aimed at supporting both workers and the government. This project, developed by talented UNAM students, not only demonstrates the capabilities of our youth but also positions Mexico as a leader in the creation of labor-inclusive technology.

My work with GPAI has also allowed me to lead global studies on the impact of AI in the workplace, publishing scientific articles that have contributed to the international discussion on this crucial topic. Additionally, I have had the privilege of advising senators from the United States and Mexico on how AI can transform work, ensuring that informed decisions are made to benefit workers.

Recommendations for the Mexican Federal Government

Throughout this experience, I have developed some recommendations that I consider essential for Mexico to stay at the forefront of AI integration in the workplace:

- Creation of International Training Programs: It is essential that Mexico invests in training our citizens in the latest trends and AI technologies at a global level. This will not only improve our internal capabilities but also strengthen our position on the international stage.

- Internships in AI + GovTech: Propose the creation of internship programs that combine AI with GovTech, training future leaders at the intersection of technology and governance. This will allow for a more efficient and modern public administration.

- Strengthening the Support Network for Mexicans Abroad: The Ministry of Foreign Affairs should promote scientific and AI connections between Mexican and international universities. These collaborations will not only facilitate the exchange of knowledge but also help our nationals abroad access strong support networks and advanced technological resources.

- Promotion of Government-Academia Collaborations: It is crucial to strengthen collaborations between the government and academia to create a robust ecosystem that drives the development of new artificial intelligence technologies in Mexico. These alliances will allow young talent to integrate into projects that modernize and improve public administration, ensuring that technological innovations benefit society as a whole.

My participation in GPAI has not only been an honor but also an opportunity to positively influence the future of work in Mexico. Through these recommendations, I trust that our country can continue moving towards a future where AI is a tool for growth and the well-being of all Mexicans.

Impulsando el Futuro del Trabajo en México a través de la Inteligencia Artificial: Mi Experiencia en el Global Partnership on AI (GPAI)

Como experta seleccionada por el gobierno federal mexicano para formar parte del Global Partnership on AI (GPAI), he tenido el honor de contribuir al grupo de trabajo en “AI for the Future of Work”. Mi participación en este grupo ha sido una experiencia enriquecedora y trascendental, especialmente en el contexto de cómo la inteligencia artificial (IA) puede y debe impactar positivamente el mercado laboral en México.

Durante mi tiempo en GPAI, he liderado varias iniciativas clave que subrayan la importancia de integrar la IA en el ámbito laboral. Una de las más destacadas fue la obtención de €20,000 para financiar prácticas profesionales enfocadas en la creación de IA para obreros, dirigidas a estudiantes mexicanos. Estas prácticas no solo brindan oportunidades de desarrollo a nuestros jóvenes, sino que también fomentan la creación de tecnología que puede mejorar las condiciones laborales en nuestro país.

En estas prácticas, enseñamos a los estudiantes sobre la importancia de desarrollar inteligencia artificial centrada en los humanos, un enfoque que prioriza el bienestar y las necesidades de las personas en el diseño y la implementación de tecnologías. Los estudiantes aprendieron a aplicar estos principios mientras trabajaban en proyectos concretos, como el desarrollo de asistentes inteligentes para la Secretaría de Relaciones Exteriores. Estos asistentes fueron diseñados específicamente para facilitar los trámites de pasaporte, mejorando la eficiencia y accesibilidad de estos servicios para los ciudadanos mexicanos.

Además, en colaboración con INFOTEC, se logró que varios estudiantes de la UNAM fueran contratados como internos para implementar soluciones de inteligencia artificial en diversas áreas del gobierno. Esta experiencia fue fundamental para que los estudiantes aplicaran sus conocimientos en un entorno real y contribuyeran directamente a la modernización del sector público. Las colaboraciones entre el gobierno y la academia, como esta, son esenciales para integrar tecnologías novedosas y asegurar que México esté a la vanguardia en el uso de IA para mejorar los servicios públicos.

Otra de las contribuciones significativas fue liderar el desarrollo de una nueva IA destinada a apoyar tanto a los obreros como al gobierno. Este proyecto, desarrollado por estudiantes talentosos de la UNAM, no solo demuestra la capacidad de nuestra juventud, sino que también posiciona a México como un líder en la creación de tecnología laboralmente inclusiva.

Mi trabajo en GPAI también me ha permitido liderar estudios globales sobre el impacto de la IA en el ámbito laboral, publicando artículos científicos que han contribuido a la discusión internacional sobre este tema crucial. Además, he tenido el privilegio de asesorar a senadores de Estados Unidos y México sobre cómo la IA puede transformar el trabajo, asegurando que se tomen decisiones informadas que beneficien a los trabajadores.

Recomendaciones para el Gobierno Federal de México

A lo largo de esta experiencia, he desarrollado algunas recomendaciones que considero esenciales para que México se mantenga a la vanguardia en la integración de la IA en el trabajo:

- Creación de Programas de Capacitación Internacional: Es fundamental que México invierta en la formación de nuestros ciudadanos en las últimas tendencias y tecnologías de IA a nivel global. Esto no solo mejorará nuestras capacidades internas, sino que también fortalecerá nuestra posición en el escenario internacional.

- Internships en IA + GovTech: Proponer la creación de programas de prácticas profesionales que combinen la IA con el GovTech, capacitando a los futuros líderes en la intersección entre tecnología y gobernanza. Esto permitirá una administración pública más eficiente y adaptada a los tiempos modernos.

- Fortalecimiento de la Red de Apoyo a Mexicanos en el Exterior: La Secretaría de Relaciones Exteriores debe impulsar la conexión científica y en IA entre universidades mexicanas e internacionales. Estas colaboraciones no solo facilitarán el intercambio de conocimientos, sino que también ayudarán a nuestros connacionales en el exterior a acceder a redes de apoyo sólidas y recursos tecnológicos avanzados.

- Fomento de Colaboraciones Academia-Gobierno: Es crucial fortalecer las colaboraciones entre el gobierno y la academia para crear un ecosistema robusto que impulse el desarrollo de nuevas tecnologías de inteligencia artificial en México. Estas alianzas permitirán que el talento joven se integre en proyectos que modernicen y mejoren la administración pública, garantizando que las innovaciones tecnológicas beneficien a la sociedad en su conjunto.

Mi participación en GPAI no solo ha sido un honor, sino también una oportunidad para influir positivamente en el futuro del trabajo en México. A través de estas recomendaciones, confío en que nuestro país puede seguir avanzando hacia un futuro en el que la IA sea una herramienta para el crecimiento y el bienestar de todos los mexicanos.

Dr. Savage’s Journey with the OECD’s Global Partnership on AI (GPAI)

By Dr. Savage

I’m thrilled to share my experience as an expert with the Global Partnership on AI (GPAI), an initiative launched by the Organization for Economic Cooperation and Development (OECD). The OECD is an intergovernmental organization that brings together governments from across the world and is dedicated to promoting policies that improve the economic and social well-being of people globally. This journey has been both inspiring and impactful, as I work alongside brilliant minds from around the world to tackle some of the biggest challenges and opportunities AI presents. Note that each government that is part of the OECD selects experts to represent them in GPAI, and I was honored to be selected by Mexico’s federal government to be one of their AI experts.

Being a GPAI expert has empowered me to: (1) create new AI solutions focused on the future of work; (2) develop recommendations for policymakers on managing AI in the workforce; and (3) travel to different parts of the world to collaborate with other GPAI experts on creating AI technologies that benefit workers and promote positive outcomes in the workforce.

My last trip took me to Paris, where I joined forces with experts to design AI solutions tailored for unions in Latin America. In this blog post, I provide information about my trip, including insights into what GPAI is and how it operates. Stay tuned for my next blog post, where I will delve deeper into the innovation workshop held in Paris and share the exciting advancements we made there.

Why GPAI Was Created

The GPAI was set up to address the rapid advancements in AI, ensuring these technologies are developed ethically and inclusively. It aims to:

- Promote responsible AI: Ensuring AI is used for good.

- Enhance international cooperation: Sharing knowledge and best practices globally.

- Support sustainable development: Using AI to solve global issues like health and education.

- Encourage innovation: Driving advancements while managing risks.

How GPAI Works

GPAI brings together experts from various countries, chosen by their governments, to collaborate on key areas like:

- Responsible AI

- Data Governance

- The Future of Work

- Innovation and Commercialization

Dr. Savage’s Role and Contributions

I’m honored to have been named a GPAI expert by Mexico’s federal government. I’m part of the working group focusing on AI for the future of work. We’re exploring how AI impacts jobs and creating strategies to ensure it benefits workers rather than displaces them.

Mini Internships for Latin America

One of the most rewarding projects I’ve been involved in is setting up mini internships for students in Latin America, including Mexico and Costa Rica. These internships teach students about human-centered design for the future of work. We’re partnering with Universidad Nacional Autónoma de México (UNAM), Universidad de Colima, and Universidad de Costa Rica.

Students are interviewing workers to understand how they use AI at work. Based on what we learn, we’re developing new AI tools to support them better.

Innovation Workshop in Paris

As part of my GPAI role, I was invited to an innovation workshop in Paris. It was an incredible experience to meet and brainstorm with leading AI experts. The insights and ideas exchanged were invaluable, and I’m excited to bring this knowledge back to our projects in Latin America and the United States. In my next blog post, I will provide more details about the Paris innovation workshop and the exciting developments that emerged from it.

The Many Futures of Design: Shaping UX with Futures Thinking – A Journey through UXPA 2024

by: Viraj Upadhya

If you’re passionate about User Experience (UX) and eager to stay at the forefront of the industry, the User Experience Professionals Association (UXPA) International is the place to be. UXPA supports people who research, design, and evaluate the UX of products and services, making it a hub for bright minds in UX, CX, AI, and related fields.

I had the privilege of attending UXPA’s recent conference at the beautiful Diplomat Beach Resort in Fort Lauderdale. With a theme centered on “The Many Futures of Design: Shaping UX with Futures Thinking,” the event was a melting pot of innovative ideas, engaging workshops, and vibrant networking opportunities. Here’s a detailed journey through the highlights of this incredible conference.

Conference Overview

The conference kicked off with an introduction to the theme and objectives, emphasizing AI, research methods, visualizations, and UX. From June 23rd to June 27th, 2024, attendees were treated to a mix of educational sessions, interactive workshops, and delightful activities like finding the Flamingo.

Attendee Demographics

The event attracted 300-400 attendees, ranging from students and professors to corporate professionals and startup enthusiasts, aged 22 to 60. Companies like IBM, Fable, Maze, Vanguard, and the American Health Association were present, alongside sponsors like MeasuringU, K12 Edu, Cella, and Bentley.

Day 1 Conference Overview: Workshops and Sessions

The conference offered a rich variety of workshops and sessions, each providing valuable insights and practical knowledge.

Enhancing User Research with AI by Cory Lebson

The AI Revolution in UX Research

Cory Lebson’s workshop was a deep dive into the transformative potential of AI in UX research. Attendees explored generative AI tools like ChatGPT, Claude, and Microsoft Copilot, which are redefining how we approach user research. Lebson demonstrated how these tools can streamline the creation of test hypotheses and speed up the analysis of user data.

Key Takeaways

- Generative AI Tools: Tools such as ChatGPT can help synthesize and summarize user feedback, making it easier to understand user needs and behaviors.

- AI-Specific Research Tools: Platforms like Looppanel and User Evaluation are tailored for UX researchers, offering features that enhance data collection and analysis.

- Test Hypotheses: Lebson emphasized the importance of creating clear hypotheses to guide research, exemplified by statements like, “If the navigation used a larger font and higher-contrast color, then more users will click on the links due to its increased prominence.”
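A hypothesis like the navigation example above only becomes useful once it is checked against data. The sketch below is my own illustration, not something shown in the workshop: it assumes click counts were collected for the current navigation (A) and the larger, higher-contrast version (B), and it uses a standard one-sided two-proportion z-test. The function name and all counts are hypothetical.

```python
import math


def two_proportion_z_test(clicks_a, users_a, clicks_b, users_b):
    """One-sided test of whether variant B's click rate exceeds variant A's.

    Returns (rate_a, rate_b, z, p_value). Assumes both groups are non-empty
    and that the pooled click rate is strictly between 0 and 1.
    """
    rate_a = clicks_a / users_a
    rate_b = clicks_b / users_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (users_a + users_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    # Upper-tail probability of the standard normal distribution.
    p_value = 0.5 * math.erfc(z / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical counts: 1,000 users saw each version of the navigation.
rate_a, rate_b, z, p_value = two_proportion_z_test(120, 1000, 156, 1000)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p_value:.4f}")
```

A small p-value would support the hypothesis that the more prominent navigation draws more clicks. With small samples or very low click rates, an exact test may be a better choice than this normal approximation.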

Data Visualization + UX (DVUX) for Dashboards, User Interfaces, and Presentations by Thomas Watkins

Visuals That Speak

Thomas Watkins captivated the audience with his insights into the power of data visualization in UX. He argued that sometimes, diagrams work better than traditional visualizations, especially in illustrating complex relationships.

Key Insights

- Diagrams vs. Visualizations: Diagrams can effectively show relationships and hierarchies, making complex information more digestible.

- Clarity in Communication: Using the right visual tool is crucial for effective communication, ensuring that data is not only presented but understood.

Measuring Design Impact Through a UX Metric Strategy by William Ryan

Quantifying UX Success

William Ryan’s workshop focused on the often elusive task of measuring design impact. By introducing a UX metric strategy, Ryan provided a roadmap for evaluating the effectiveness of design choices through concrete metrics.

Key Takeaways

- Learnability Metrics: Assessing how quickly users can learn to navigate a product is essential for understanding usability.

- Task Completion Times: Measuring how long it takes for users to complete tasks can highlight areas for improvement.

- User Segmentation: Differentiating between novice and expert users allows for more tailored UX strategies.
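To make the three metrics above concrete, here is a minimal sketch of how they might be computed from a usability-test log. It is an illustration under my own assumptions rather than Ryan’s method: the record layout, the segment labels, the timings, and the choice to measure learnability as the drop in median time between the first and last trial are all hypothetical.

```python
from statistics import median

# Hypothetical log rows: (user_id, segment, trial_number, seconds_to_complete)
sessions = [
    ("u1", "novice", 1, 95.0),
    ("u1", "novice", 2, 70.0),
    ("u1", "novice", 3, 58.0),
    ("u2", "novice", 1, 110.0),
    ("u2", "novice", 2, 84.0),
    ("u2", "novice", 3, 66.0),
    ("u3", "expert", 1, 42.0),
    ("u3", "expert", 2, 40.0),
    ("u3", "expert", 3, 39.0),
]


def median_time_by_segment(rows):
    """Median task completion time (seconds) for each user segment."""
    times = {}
    for _user, segment, _trial, seconds in rows:
        times.setdefault(segment, []).append(seconds)
    return {segment: median(values) for segment, values in times.items()}


def learnability_by_segment(rows):
    """Seconds saved between the first and last trial, per segment.

    Assumes every segment has data for both trial 1 and the final trial.
    A larger value means users sped up more with practice.
    """
    last_trial = max(trial for _user, _segment, trial, _seconds in rows)
    first, last = {}, {}
    for _user, segment, trial, seconds in rows:
        if trial == 1:
            first.setdefault(segment, []).append(seconds)
        if trial == last_trial:
            last.setdefault(segment, []).append(seconds)
    return {seg: median(first[seg]) - median(last[seg]) for seg in first}


print(median_time_by_segment(sessions))
print(learnability_by_segment(sessions))
```

Splitting the results by segment keeps slow novice times from hiding the fact that experts may already be near their fastest, which is the point of treating the two groups separately.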

Visual Storytelling for Research Impact: UX Research Models & Frameworks by Sophia Timko

The Art of Visual Storytelling

Sophia Timko’s session on visual storytelling was a masterclass in presenting research findings. She underscored the importance of clear and compelling visuals to convey research insights effectively.

Key Takeaways

- Best Practices: Timko shared frameworks and models for visual storytelling that enhance the impact of UX research.

- Effective Communication: The session emphasized the need for visuals that not only present data but tell a story, making complex research accessible and engaging.

AI TwoWays: Enhancing Human-AI Interaction

Bridging the Communication Gap

This intriguing session delved into the dual nature of AI explanations. The focus was on how AI can enhance human understanding and trust by providing clear, contextually relevant explanations.

Key Takeaways

- User-Centric AI: Designing AI systems that dynamically explain their logic helps build user trust.

- Two-Way Communication: AI systems should not only provide answers but also seek to understand user intent, creating a more interactive and intuitive experience.

Building Connections: Trust, Culture & Engagement in Remote Teams by Lauren Schaefer

Cultivating Remote Team Excellence

Lauren Schaefer’s workshop addressed the unique challenges of leading remote design teams. Her insights into building trust and fostering engagement were particularly relevant in today’s increasingly remote work environment.

Key Practices

- Team Rituals: Regular team-building activities, such as virtual games and personality assessments, help strengthen remote teams.

- Culture of Trust: Schaefer emphasized the importance of creating a culture where team members feel valued and connected, even from afar.

Data Visualization: The Good, the Bad, and the Dark Patterns by Douglas Johns & Andrea Sanny

Navigating the Data Visualization Landscape

Douglas Johns and Andrea Sanny’s session was a journey through the landscape of data visualization, highlighting both best practices and common pitfalls.

Key Insights

- Good Practices: Effective data visualizations are clear, accessible, and honest. Annotations, appropriate use of whitespace, and consideration of accessibility are crucial.

- Dark Patterns: The session warned against misleading visualizations, which can distort data and misinform stakeholders.

Behind the Bias: Dissecting Human Shortcuts for Better Research & Designs by Lauren Schaefer

Understanding Cognitive Biases

Lauren Schaefer’s workshop explored the impact of cognitive biases on user research and design. She provided strategies for mitigating these biases to ensure more accurate and inclusive research outcomes.

Key Takeaways

- Recognizing Biases: Understanding common cognitive biases helps in designing better research protocols.

- Inclusive Research: Ensuring diverse participant pools and employing mixed research methods can mitigate the effects of bias.

Building Generative AI Features for All – Panel Discussion

Inclusive AI Design

The panel discussion on building generative AI features brought together diverse voices from Google’s product team. The panelists shared their experiences and strategies for creating inclusive and accessible AI products.

Key Insights

- Diverse User Needs: Designing for a wide range of user identities, including age, race, gender, and disability, is essential for creating inclusive products.

- Adapting Processes: The panel highlighted the importance of continuously adapting design processes to meet the evolving needs of diverse users.

Creating Healthier Team Functionality & Product Team Alignment Through Play

This interactive workshop focused on enhancing team dynamics and product team alignment through the use of play and embodied experiences. The session highlighted how incorporating playful activities can build trust and strengthen relationships within cross-functional teams, leading to more effective collaboration and alignment.

Key Insights

- Role of Play: Playful activities can break down barriers and foster trust among team members, making it easier to align on goals and overcome conflicts.

- Embodied Experiences: Engaging in physical, interactive exercises can create lasting bonds and improve team cohesion.

- Long-Term Impact: The effects of these activities can last for several months, enhancing overall team functionality and productivity.

Takeaways

- Implement interactive and playful activities in team meetings, readouts, and offsite sessions to build stronger connections and improve alignment.

- Use these activities to address and resolve conflicts, making collaboration more effective and enjoyable.

Ethical UX: What 96 Designers Taught Us About Harm

This session explored the ethical challenges faced by UX designers and the potential harm that can arise from design decisions. By analyzing insights from a survey of 96 UX and product designers, the talk aimed to foster a deeper understanding of design ethics and provide actionable steps for integrating ethical considerations into design practices.

Key Insights

- Design Ethics: Navigating the ethical landscape of UX design is crucial for avoiding harm and ensuring user-centered practices.

- Categories of Harm: Understanding different types of harm can help designers anticipate and mitigate negative impacts of their work.

- Shared Language: Developing a common language around ethical design practices can improve communication and awareness within teams.

Takeaways

- Adopt ethical design practices by identifying potential sources of harm and addressing them proactively.

- Use the templates and actionable steps provided in the session to integrate ethical considerations into design workflows.

Measuring Tech Savviness: Findings from 8 Years of Studies and Practical Use in UX Research

This session presented findings from a long-term study aimed at measuring tech savviness in users. The research focused on developing a reliable metric for assessing tech savviness, which helps differentiate between user abilities and interface issues.

Key Insights

- Tech Savviness Metric: A validated measure of tech savviness can provide valuable insights into user capabilities and interface usability.

- Research Approaches: The study utilized various methods, including technical activity checklists and Rasch analysis, to refine the metric and validate its effectiveness.

- Predictive Validation: The metric explained a significant portion of the variation in task completion rates, demonstrating its practical utility.
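For readers unfamiliar with Rasch analysis, the sketch below shows the standard dichotomous Rasch model, in which the chance that a person can do a checklist activity depends only on the gap between the person’s ability and the activity’s difficulty. This is a textbook formula, not the presenters’ actual instrument; the activity names and difficulty values are hypothetical.

```python
import math


def rasch_probability(ability, difficulty):
    """Probability that a person succeeds on an item under the Rasch model.

    Both values are on the same logit scale. When ability equals difficulty,
    the probability is exactly 0.5.
    """
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def expected_checklist_score(ability, item_difficulties):
    """Expected number of checklist activities a person can complete."""
    return sum(rasch_probability(ability, d) for d in item_difficulties.values())


# Hypothetical checklist of technical activities with made-up difficulties.
checklist = {
    "install an app": -1.5,
    "set up a home router": 0.5,
    "write a shell script": 2.0,
}

for ability in (-1.0, 0.0, 1.0):
    score = expected_checklist_score(ability, checklist)
    print(f"ability {ability:+.1f}: expected score {score:.2f} of {len(checklist)}")
```

In a real Rasch analysis the abilities and difficulties are estimated from response data rather than set by hand. The sketch only shows how the model links the two once they are known.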

Takeaways

- Apply the tech savviness measure in UX research to better understand user capabilities and improve interface design.

- Use the findings to inform design decisions and enhance the overall user experience.

Menu Mania: What’s Wrong with Menus and How to Fix Them

This presentation addressed common issues with menu design in websites and applications. It reviewed best practices for creating effective menus, including mega menus, context menus, hamburger menus, and more, drawing from case studies of successful redesigns.

Key Insights